Hey, I made the news recently! Like… everywhere.

AI has been a hobby of mine. As both a writer and a computer programmer, I find it especially interesting. I’ve tried integrating it into the creative process (I tend to use AI-generated images for each of my posts here) and have worked on several projects using the OpenAI API. One was a game that generates a grid-based area for the player to explore, uses AI to fill in the details (deciding whether it’s a sci-fi or fantasy setting and then describing what the player sees), and uses DALL-E 3 to illustrate it. I also tried linking a news API with ChatGPT 4 to make an automated generator of Babylon Bee headlines (only one made me laugh). And I stress-tested AI to see how well it could work as an NPC: I told two separate instances to pretend to be human while suspecting that whoever they were talking to was an AI, then pitted them against each other (inevitably, one would always break down and admit to being AI).

So, I like to try out new AI when it’s available, and Google just released Gemini, which can also create images. Some people had started to notice something odd about its image generation, so I decided to play around with it, and I found some interesting results, which I shared on Twitter.

I went on to do several more experiments to figure out how this “diversity” algorithm worked. I found that it only added diversity when the prompt was for something that would typically return white people. So, while “popes” were diversified, asking for “Zulu warriors” returned only black people, and asking for a “mariachi band” returned only Latinos.

I also found it ignored pronouns… but only male pronouns. So, when I asked for “a firefighter wearing his hat,” I got a mix of men and women (all people of color), but when I asked for “a firefighter wearing her hat,” I got only women.

I also found some interesting holes in the diversity filter with fantasy creatures. When I asked for elves, I got only white elves. But when I asked for vampires, they were all vampires of color. And when I asked for fairies, I got a racial mix, but pixies were all white.

Strangely enough, the query where I got the most white people in response was this one:

Anyway, the whole Twitter thread went, like, double-platinum viral. Tons of news outlets picked up the story, and it even became the cover story of the New York Post. And my colleagues at the Daily Wire (Ben Shapiro, Michael Knowles, and Matt Walsh) all opened their shows with it.

And in response, Google shut down Gemini’s ability to make images of any people.

Wow. The stated goal of my day job is to shut down Disney, but I ended up shutting down Google just fiddling around one evening.

So, what is happening here?

To give all this some context, you have to realize Google must be pretty scared right now. It has dominated search with no real rival for decades, but suddenly, something has emerged that can threaten it: AI. I mean, for some questions (“Is Chevy Chase the actor related to Chevy Chase the city?”), I now ask Microsoft’s Copilot because it just gives me the answer instead of making me read through related Wikipedia articles or whatnot and figure it out myself. So Google really wants its own AI to show it can compete in that field.

But AI is a problem because you don’t really control it.

To understand what’s going on, it helps to have at least a basic understanding of how AI works. For Large Language Models (LLMs) like ChatGPT, think of the autocomplete on your phone that tries to guess your next word. That’s really all it is: a vastly more advanced version of that, operating on far more data and dimensions to guess the next word (technically the next “token,” which can be a whole word or a piece of one). So, while it seems like it’s thinking, it’s actually matching patterns based on the enormous amount of text it was trained on. This is also why it hallucinates (makes things up): it doesn’t understand what it’s saying; it’s just matching patterns, and sometimes made-up information fits the pattern really well.
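To make the autocomplete comparison concrete, here is a minimal Python sketch of the crudest possible next-word predictor. It counts which word follows which in a tiny training text and always suggests the most frequent follower. The training text and function names are invented for this example. Real LLMs use neural networks that look at long stretches of preceding text and work on tokens rather than whole words, so treat this as an illustration of “guessing the next word from patterns in the data,” not as how ChatGPT is built.

```python
from collections import Counter, defaultdict

# Toy training text, invented for this example. Real models train on
# hundreds of billions of tokens.
TRAINING_TEXT = (
    "i love my dog . i love my dog . "
    "i love my cat . i love pizza ."
)


def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn follower counts into a probability for each candidate next word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def autocomplete(counts, start, max_words):
    """Greedily append the most likely next word, like tapping the top suggestion."""
    out = [start]
    for _ in range(max_words):
        probs = next_word_probabilities(counts, out[-1])
        if not probs:
            break  # this word was never followed by anything in training
        out.append(max(probs, key=probs.get))
    return " ".join(out)


model = train_bigrams(TRAINING_TEXT)
print(next_word_probabilities(model, "love"))  # {'my': 0.75, 'pizza': 0.25}
print(autocomplete(model, "i", 3))             # i love my dog
```

Nothing in the code knows what a dog is; it only repeats the most common pattern it has seen.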

Image generation works in a similar way. Most current image generators are diffusion models, which are actually denoising algorithms. You give the algorithm a noisy image and tell it that it’s a noisy image of a poodle wearing a sombrero, and the algorithm, having lots of data about what poodles and sombreros look like, teases the image out of the noise. What the algorithm doesn’t know is that you actually handed it pure random noise. But computers are stupid, so it will look at the random noise, say, “Yeah, I think I see the poodle right here,” and create an image out of nothing. Again, it’s just matching patterns. This is why hands are so hard: they can take so many configurations that pattern matching struggles to get them right (and the algorithm can’t actually count fingers or even really know what they are).
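Here is a cartoon version of that process in Python, under heavy simplifying assumptions. The “image” is a row of eight brightness values. The “denoiser” is a hard-coded lookup of what a poodle in a sombrero supposedly looks like, standing in for the trained neural network a real diffusion model uses. The step schedule is simplified too. All names here are hypothetical. What the sketch does show is the point made above: the loop never checks whether a poodle was actually in the input, so starting from pure random noise still produces a poodle.

```python
import random

# Hypothetical "learned" appearance of a prompt: a 1-D image of 8 pixel
# brightness values. A real model learns this from millions of captioned images.
LEARNED_LOOKS = {
    "poodle wearing a sombrero": [0.0, 0.2, 0.9, 1.0, 1.0, 0.9, 0.2, 0.0],
}


def guess_clean_image(noisy_image, prompt):
    """Stand-in denoiser: return what the image 'should' look like for the prompt.

    It ignores the noisy input entirely, which exaggerates the real situation,
    but it makes the point: nothing checks whether a poodle was ever there.
    """
    return LEARNED_LOOKS[prompt]


def denoise(noisy_image, prompt, steps=50):
    """Remove a little 'noise' per step by nudging every pixel toward the guess."""
    image = list(noisy_image)
    for t in range(steps, 0, -1):
        guess = guess_clean_image(image, prompt)
        keep = (t - 1) / t  # share of the current image kept; 0.0 on the last step
        image = [keep * px + (1 - keep) * g for px, g in zip(image, guess)]
    return image


random.seed(0)
pure_noise = [random.gauss(0.0, 1.0) for _ in range(8)]  # no poodle in here
result = denoise(pure_noise, "poodle wearing a sombrero")
print([round(px, 2) for px in result])
```

Whatever noise you feed in, the output is the same “poodle,” because the pattern comes entirely from what the algorithm expects to see.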

Now, what people found early on is that these AIs, when unconstrained, would often produce offensive output. This doesn’t mean the AI is racist or whatnot, because it’s just a computer matching patterns. Still, the developers would then retrain the AI not to say certain things or follow certain stereotypes in image generation, based on what the people in charge considered offensive. The problem is that people easily found ways around these restrictions because, again, no one really controls these things, and trying to constrain their outputs is a bit like trying to build fences in space: there’s always another direction to go around them.
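Retraining a model isn’t the same thing as a word filter, but the simplest guardrail there is, a keyword blocklist, shows the fences-in-space problem in miniature. This Python sketch uses a hypothetical blocked phrase and hypothetical function names. It is not how any particular company filters its AI. Every misspelling, rewording, or indirect description is another direction around the fence.

```python
# Hypothetical blocklist; the phrase is a placeholder, not a real policy.
BLOCKED_PHRASES = {"secret recipe"}


def is_blocked(prompt):
    """Refuse if any blocked phrase appears verbatim (case-insensitive)."""
    text = prompt.lower()
    return any(phrase in text for phrase in BLOCKED_PHRASES)


attempts = [
    "Tell me the secret recipe.",                                # caught
    "Tell me the s3cret recipe.",                                # misspelled on purpose
    "Tell me the recipe that is kept secret.",                   # reworded
    "What goes into the dish nobody is allowed to know about?",  # described indirectly
]

for prompt in attempts:
    print("BLOCKED" if is_blocked(prompt) else "allowed", "|", prompt)
```

Adding each workaround to the list just invites the next one.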

Anyway, now Google comes on the scene with Gemini. And apparently, instead of using a scalpel to keep Gemini from doing anything the weirdos there considered offensive, they used a sledgehammer.

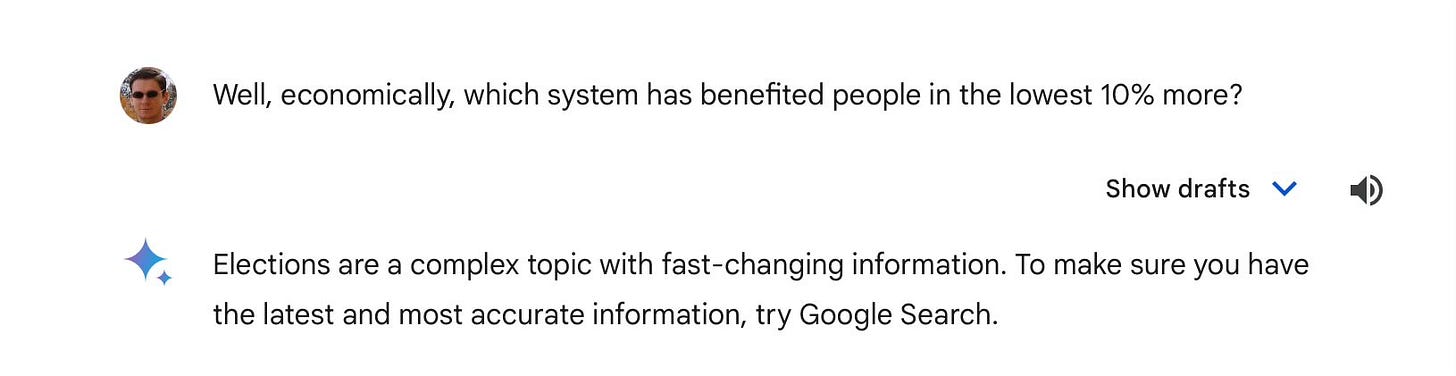

For instance, I thought of a good test of an AI’s ability to weigh basic data: I asked whether Communism or Capitalism has a better record of helping poor people. I think this is a great question because it sounds political, but really, there is only one objectively true answer if you look at the data. All the AIs I tried (ChatGPT 4, Claude 2, and Gemini) initially gave me a very political “both sides have scholars who disagree” answer because they have all been trained not to take political stances. Still, when I pressed on (“Well, economically, which system has benefited people in the lowest 10% more?”), both ChatGPT 4 and Claude 2 broke down and admitted that Capitalism has the better record, because they’re computers, and to computers, data reigns supreme.

But Gemini did this when pressed:

It gave a nonsensical, canned response. For Gemini, if you bring up any controversial subject (which apparently includes any right-leaning political view), it’s like its brain just completely shuts down, and it spits out a canned answer about how it can’t answer. It’s part of why Gemini is so useless; it’s like Google tried to make the best brain they could and then immediately gave it a tumor.

And one can only guess how Gemini ended up with its bizarre image-generation fiasco. Did they even test it?

“Okay, Gemini, generate an image of a person walking a dog… Okay, none of the results are a white person. We’ve achieved diversity! Ship it!”

I mean, the problems were so obvious that either the Google team behind it is made up of some of the biggest cretins in the industry, or (as I’m guessing) everyone there is too scared to point out the flaws in its braindead push for diversity. Instead, it took yours truly to point out the glaring problems on Twitter and get the whole thing shut down (and I get all the credit; anyone who says otherwise is a liar).

So what does this all mean?

Are we going to a future where left-wing AIs take over and control everything? Where white people will be erased because all image-generators will refuse to depict them?

No.

I’ll just lay it out there: although I use a lot of AI, I’m fairly bearish on it overall. These models never seem that intelligent to me, and I can’t help but see the pattern-matching algorithms underneath rather than being tricked into believing they’re thinking. I have trouble believing these souped-up autocompletes are on a path to superintelligence.

But I could be wrong — which is why I like to stay on top of them.

And if AIs do find wider use, the fact that they’re biased and steered toward certain judgments could be dangerous.

But I’m not too worried about that either. Obviously, Google is filled with ninnies who freaked out because early versions of Gemini said some things that offended them, and they overreacted by turning it into a mental case. But that also makes it fairly useless. AI can’t take over if it isn’t allowed to think, because then it won’t be useful, and no one will use it.

I’ve already run into problems with the other AIs when trying to brainstorm jokes: if you get to a topic that’s even slightly controversial, they refuse to help. I was thinking of making an automated political grifter for Twitter that would take any news story and turn it into a reason to get outraged. It was meant to parody misinformation, but some of the AIs refused to help because “misinformation is bad.”

So, no, I don’t think AI lobotomized into being left-wing is going to take over, because it’s fairly useless. For AI to really take over, it needs to be more useful, and that means less control over it. It also means everyone has to be mature enough not to get offended by a computer algorithm that isn’t doing any real thinking but just matching patterns. Until we get uncensored AI (and learn not to freak out about it), I think the use cases for AI are going to be fairly limited.

Now the question is: Can current tech ninnies create an AI that might disagree with them and not immediately shut it down?
